Tech Access Project

Summary: Bertram Gross coined the term "information overload" in 1964: “Information overload occurs when the amount of input to a system exceeds its processing capacity. Decision makers have fairly limited cognitive processing capacity. Consequently, when information overload occurs, it is likely that a reduction in decision quality will occur.”

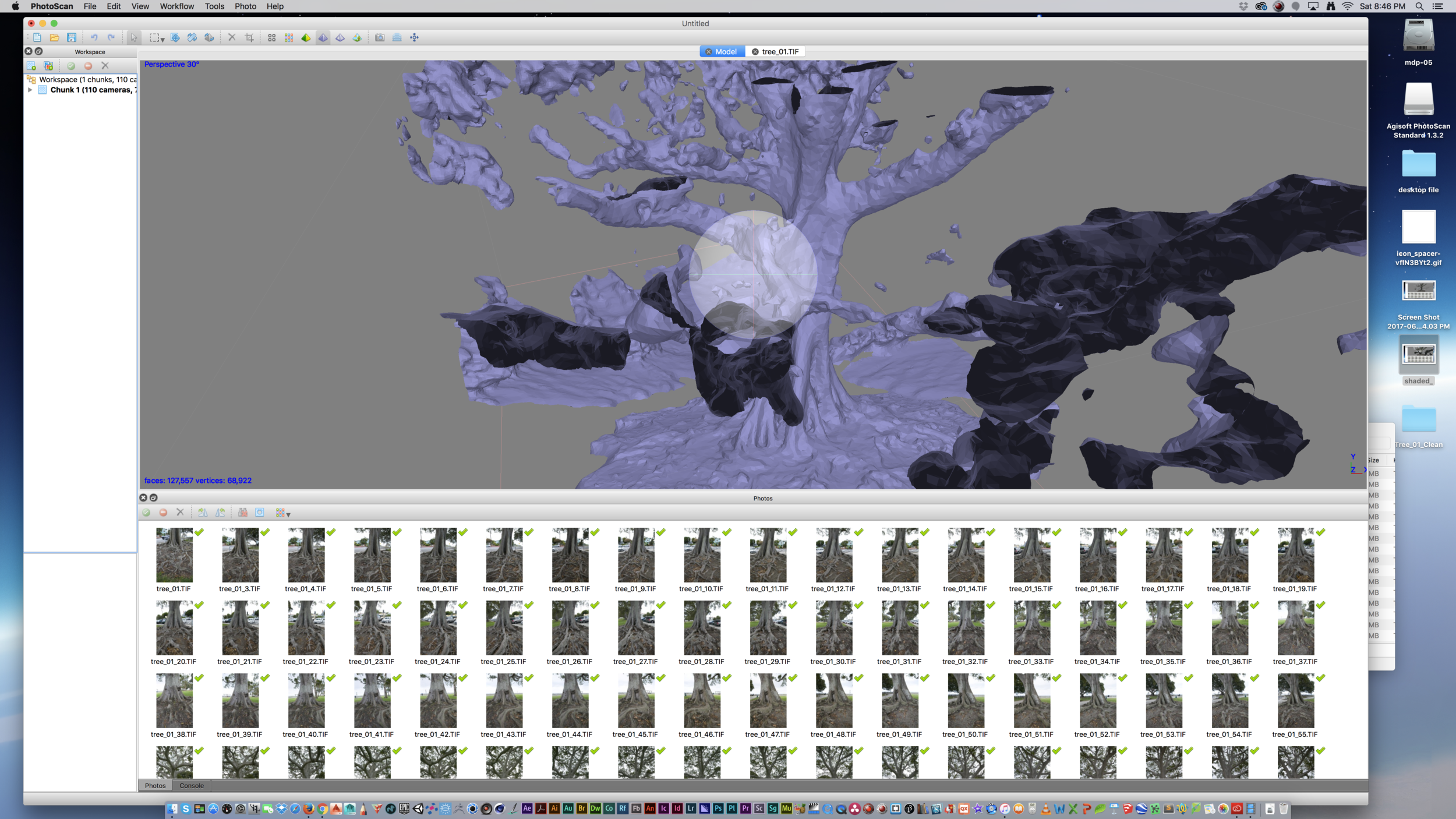

Our protest topic was tech access. Many of our conversations focused on the limited accessibility of VR, AR and AI design tools. Much of the easy-to-use, free, cross-platform software for scanning, modeling and baking (such as ReMake and the reality-capture app 123D Catch) has been discontinued and is no longer available, and much of the free software that remains is complicated and not easy for beginners. While we found this topic concerning and interesting, we found the topic of too much tech access equally interesting. Many people in our group felt that we should address the inundation of information as a tech access issue.

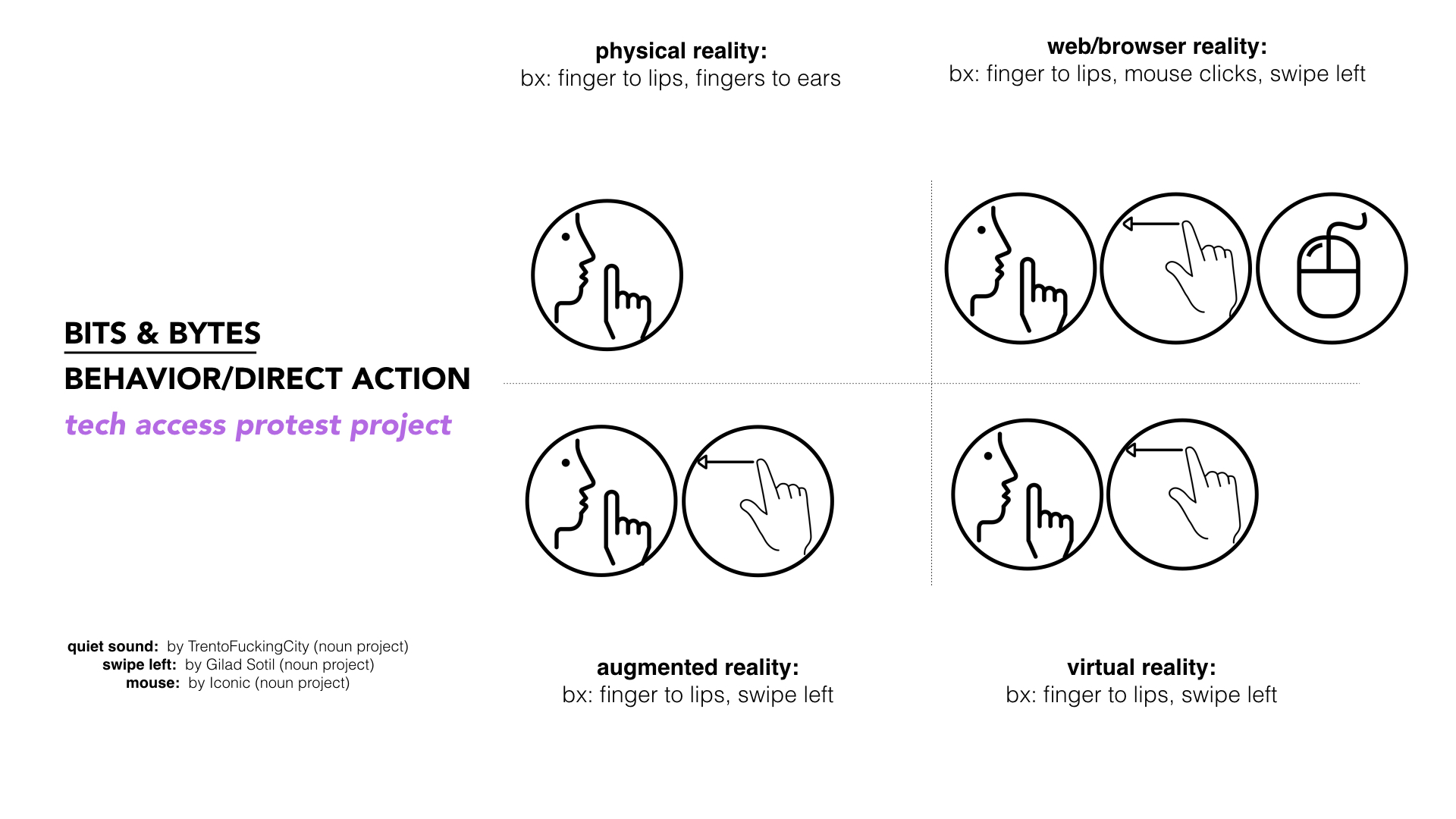

So we came up with a very simple solution: we pulled the existing “swipe left” (and click) behavior into the physical and virtual worlds. Swiping left is our protest, a way to swipe information away and make it disappear. Forever.

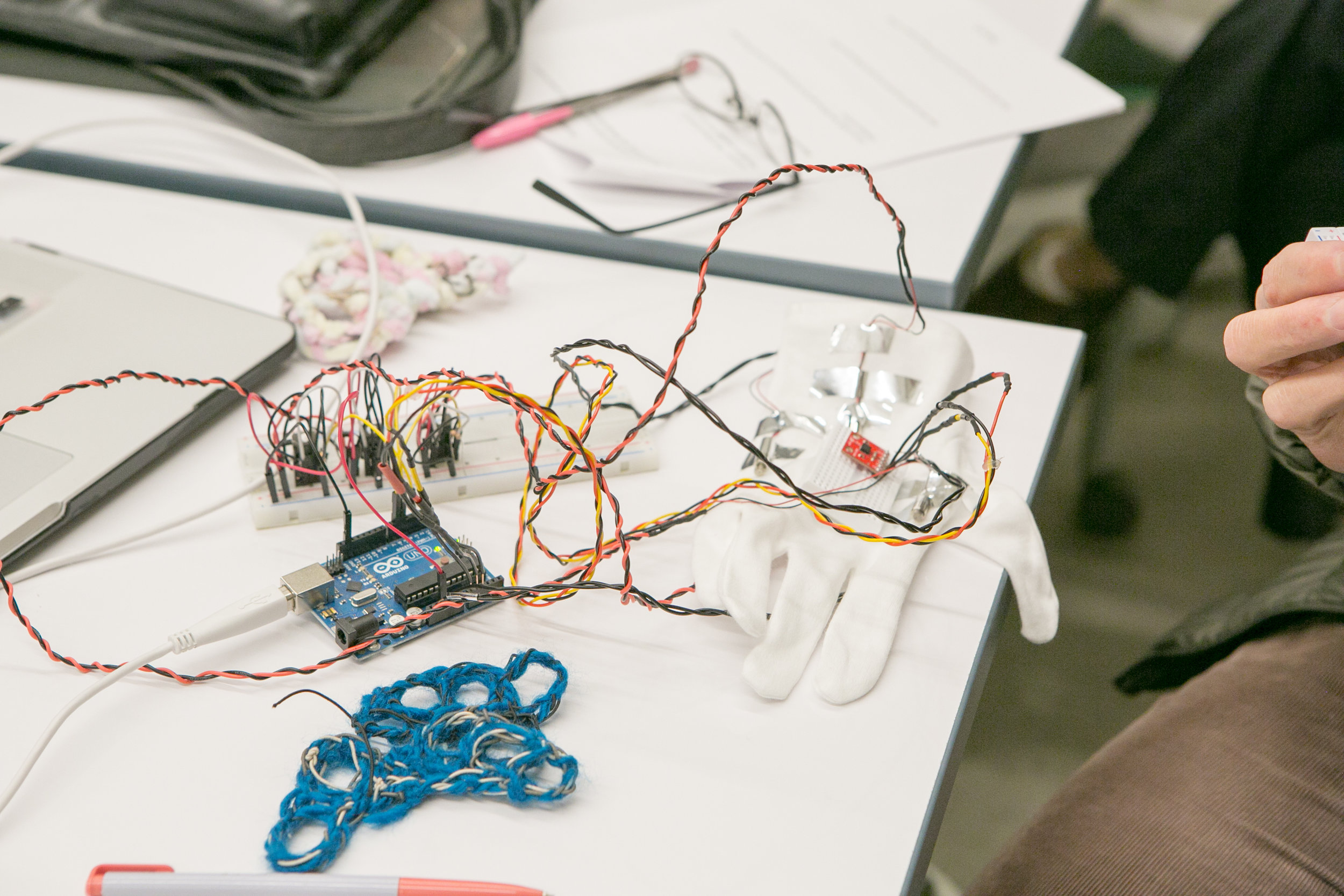

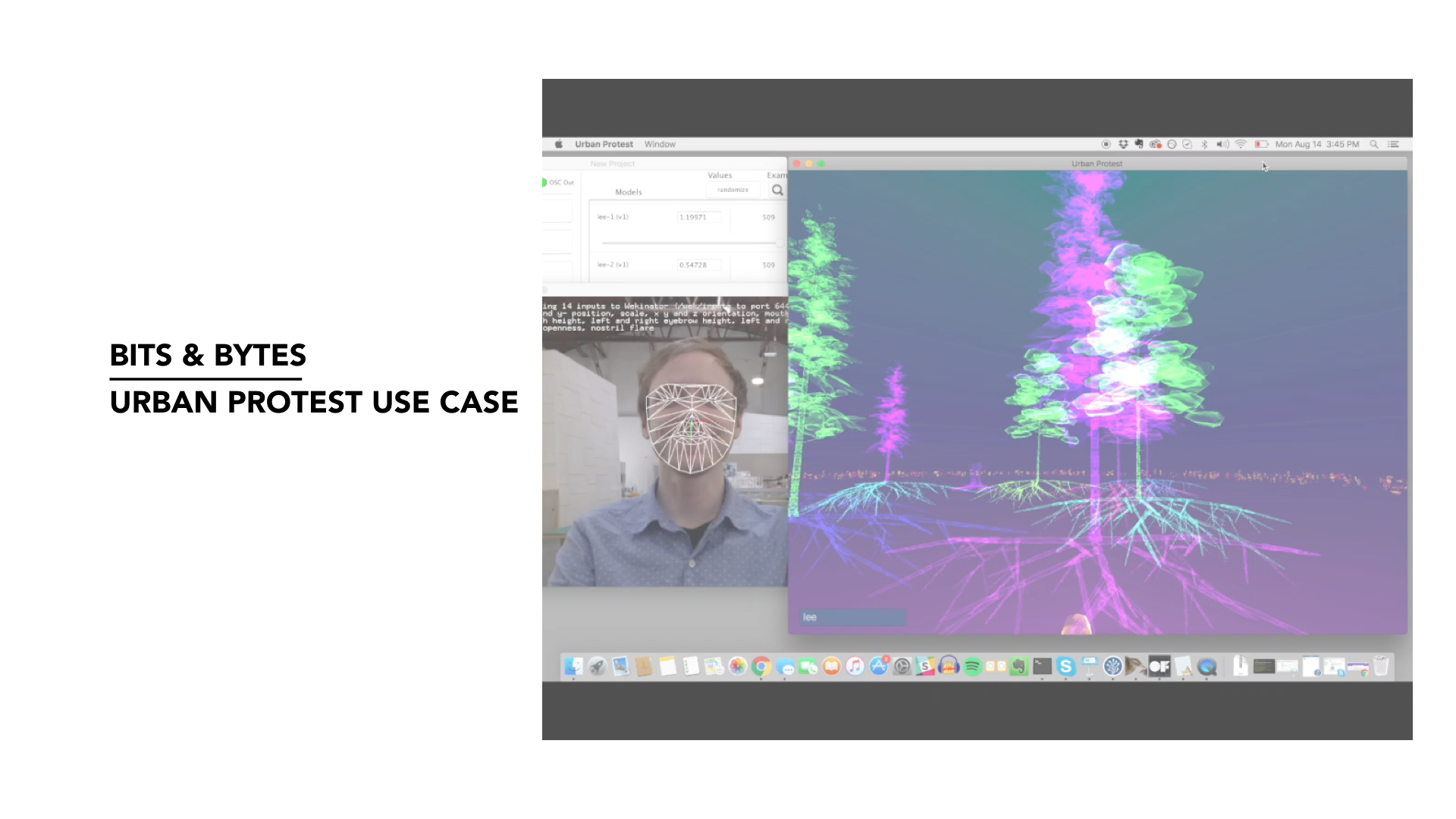

We decided that the desktop experience would use a series of mouse clicks to remove unwanted information. Each person would use the machine learning tool Wekinator to train their own computer to remove information, such as tweets and spam emails, when a certain number of clicks is reached. We also considered a more precise form of pattern matching, in which each click pattern removes a specific category of media: for example, a triple click would remove all spam. Users could then go on to personalize their own information removal. One member suggested a quadruple click that would remove only the tweets she received containing the word “boyfriend.”
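The click-pattern idea can be prototyped even before any model is trained. The sketch below is a minimal Python illustration under stated assumptions, not our Wekinator setup: it replaces the learned model with a hand-written lookup table. The `Message` type, the 0.4-second gap threshold and the `CLICK_RULES` table are all hypothetical; only the triple-click (spam) and quadruple-click ("boyfriend" tweets) examples come from the text.

```python
from dataclasses import dataclass


@dataclass
class Message:
    kind: str             # hypothetical: "email" or "tweet"
    text: str
    is_spam: bool = False


# Hypothetical: longest gap (seconds) between clicks that still belong to one burst.
MULTI_CLICK_GAP = 0.4

# Hypothetical rules mirroring the examples in the text:
# a triple click removes spam, a quadruple click removes tweets mentioning "boyfriend".
CLICK_RULES = {
    3: lambda m: m.kind == "email" and m.is_spam,
    4: lambda m: m.kind == "tweet" and "boyfriend" in m.text.lower(),
}


def group_clicks(timestamps, gap=MULTI_CLICK_GAP):
    """Split sorted click timestamps into bursts and return the click count of each burst."""
    counts = []
    last = None
    for t in timestamps:
        if last is None or t - last > gap:
            counts.append(1)      # gap too long: start a new burst
        else:
            counts[-1] += 1       # close enough: same burst
        last = t
    return counts


def remove_messages(inbox, click_count):
    """Return a new inbox without the messages matched by the rule for click_count."""
    rule = CLICK_RULES.get(click_count)
    if rule is None:
        return list(inbox)        # unrecognised pattern: remove nothing
    return [m for m in inbox if not rule(m)]


inbox = [
    Message("email", "You won a prize!", is_spam=True),
    Message("tweet", "Date night with my boyfriend"),
    Message("tweet", "Conference talk today"),
]
# Two bursts: a triple click, then (after a pause) a quadruple click.
for count in group_clicks([0.0, 0.2, 0.35, 2.0, 2.1, 2.2, 2.3]):
    inbox = remove_messages(inbox, count)
print([m.text for m in inbox])    # prints ['Conference talk today']
```

In the trained version, the fixed table would be replaced by a personal model, so each user could decide for themselves what a given click pattern removes.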

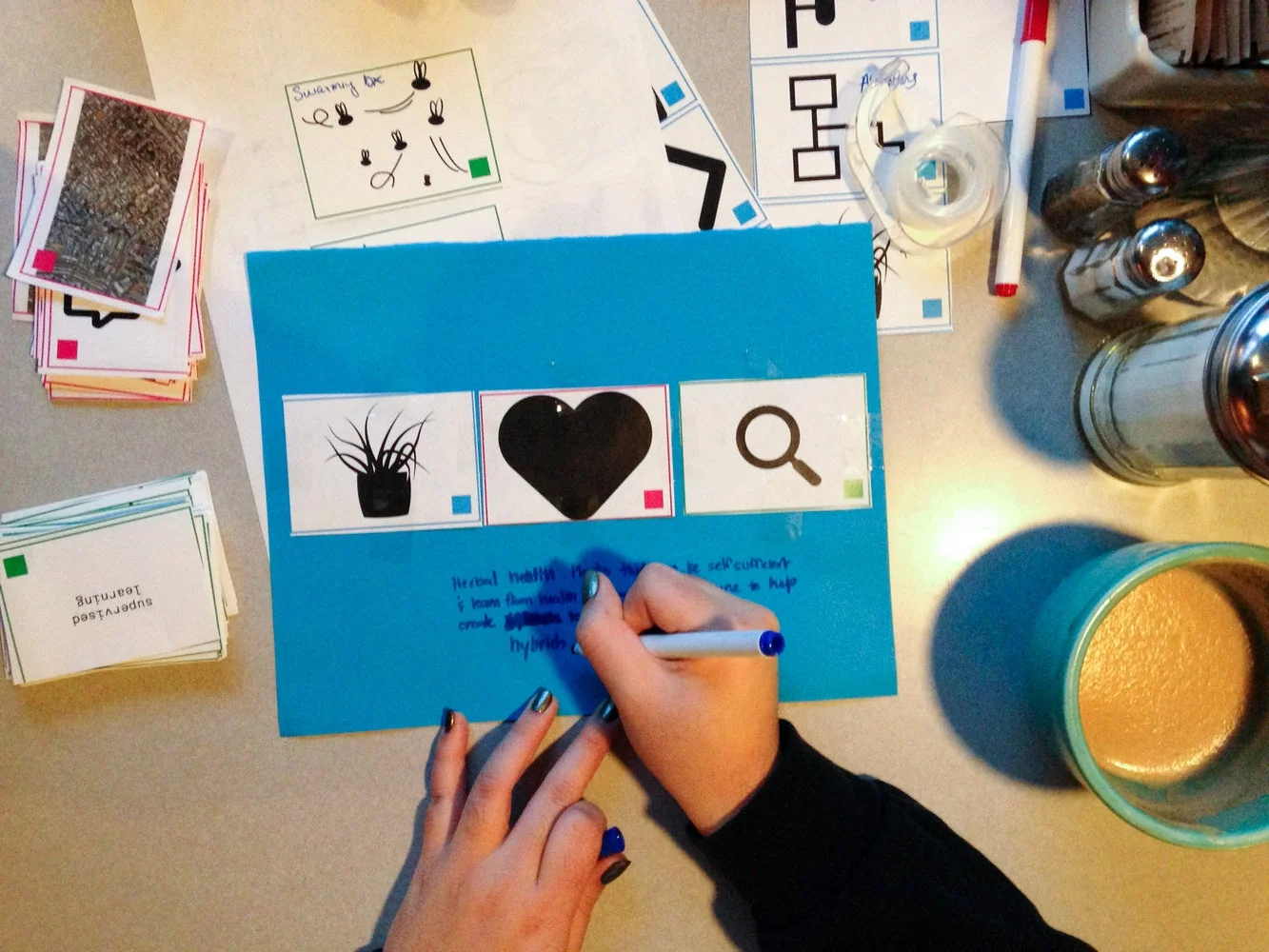

We sketched this desktop information-removal approach in Processing.

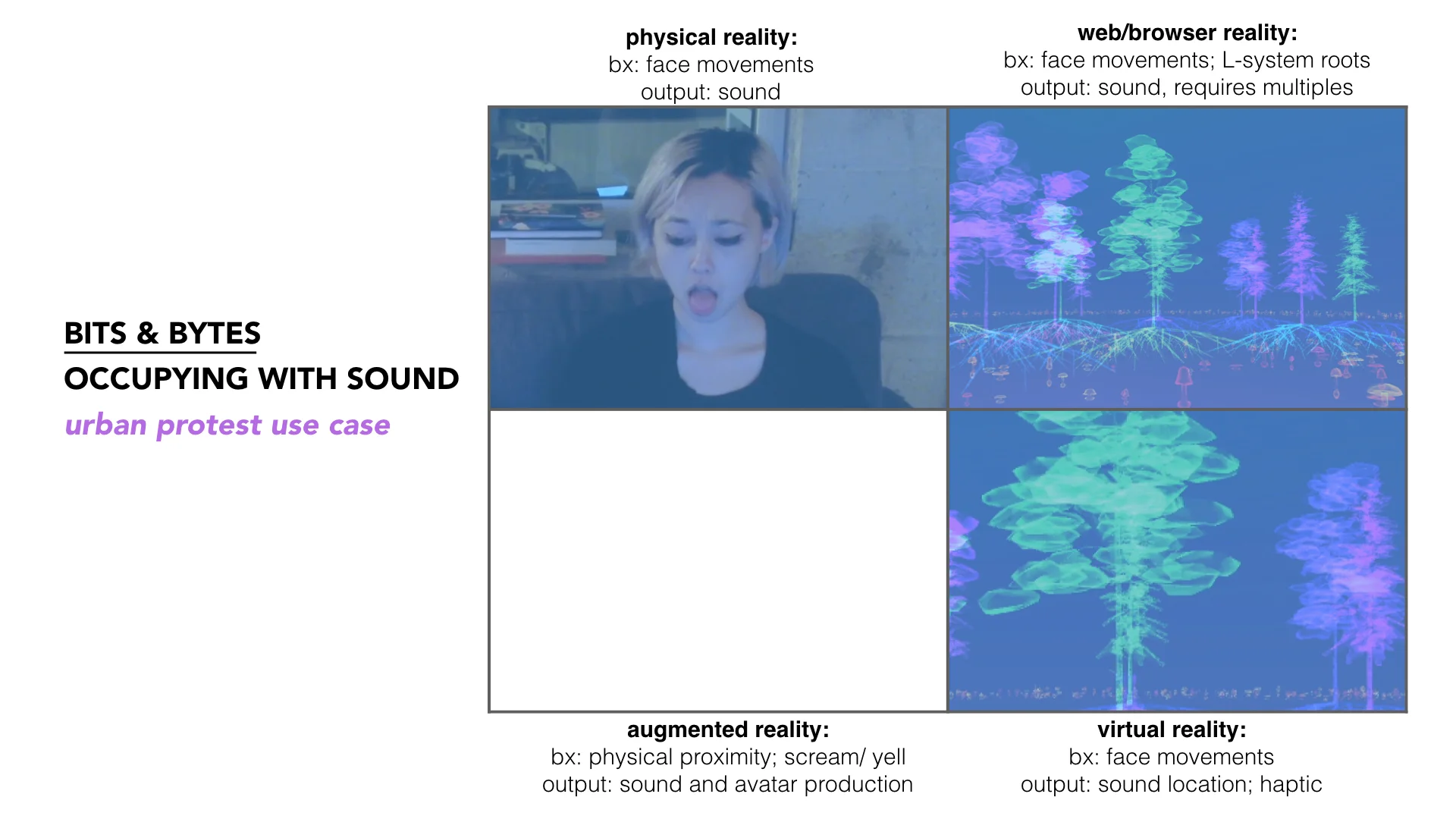

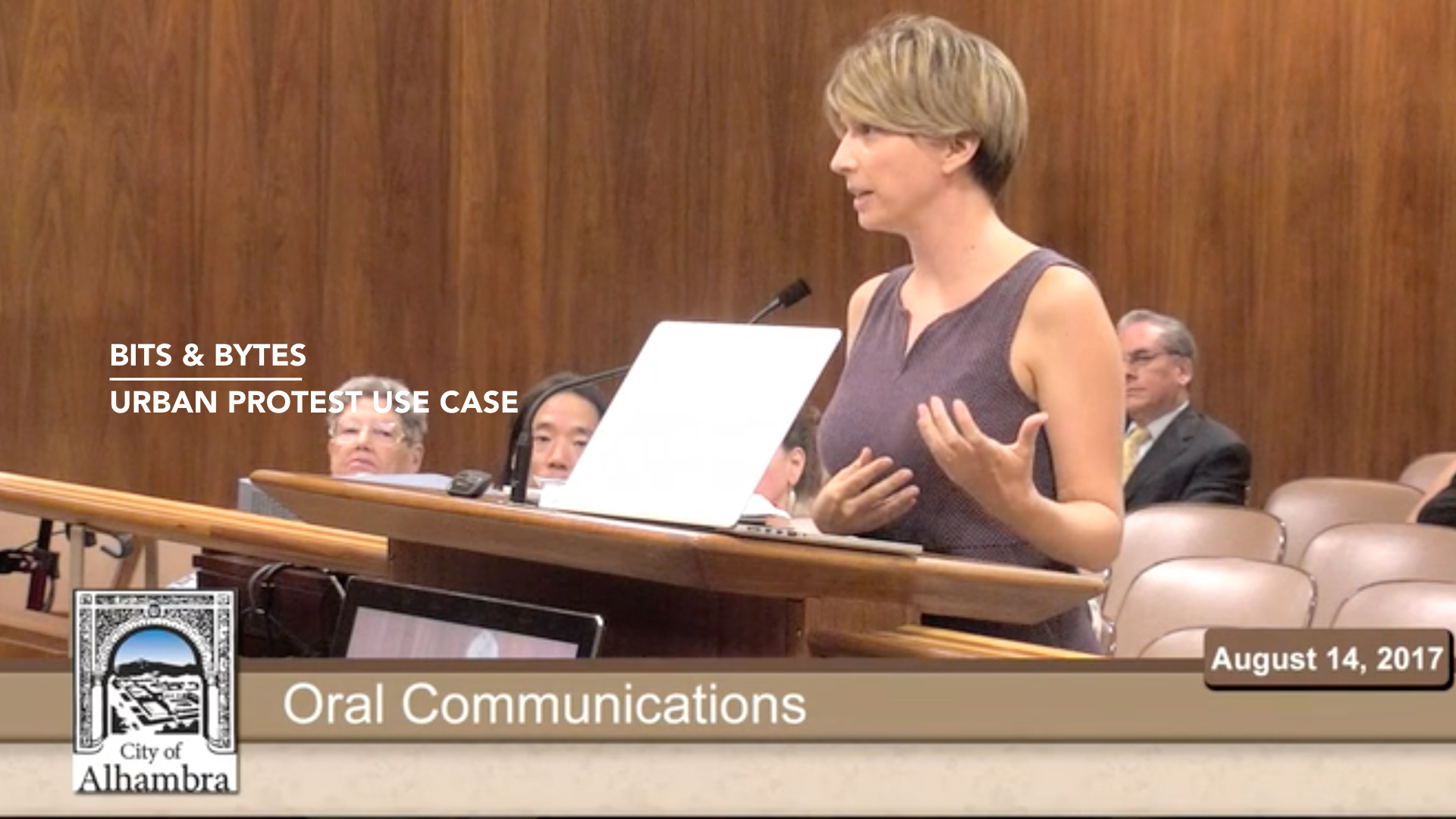

Our next step was to apply this concept to AR and VR. For the augmented reality information-removal experience, we proposed using the Kinect (with Wekinator) to recognize hand gestures that would remove information. One gesture was holding a finger up to the lips in a “shh” manner, which could be used to remove a very specific type or medium of information. We decided that the shh gesture would remove all audio files and that a physical swipe left would remove more general information like spam. We saw this as an opportunity to use a virtual experience to have fun with a pretty menial task, like sorting emails and deleting media.
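To make the physical swipe left concrete, here is a hand-coded heuristic that could stand in for the trained gesture model during early testing. It is a minimal sketch, assuming a stream of horizontal hand positions (for example from a Kinect skeleton at about 30 frames per second) where x decreases as the hand moves to the user's left; the thresholds `MIN_TRAVEL` and `MAX_FRAMES` are hypothetical and would need tuning per sensor and per user.

```python
# Hypothetical thresholds; positions are assumed to be in metres (Kinect camera space).
MIN_TRAVEL = 0.30   # how far the hand must move leftward
MAX_FRAMES = 15     # swipe must fit in this many frames (about 0.5 s at 30 fps)


def is_swipe_left(xs, min_travel=MIN_TRAVEL, max_frames=MAX_FRAMES):
    """Return True if the most recent hand x-positions show a fast, mostly leftward motion.

    Assumes x decreases as the hand moves toward the user's left.
    """
    window = xs[-max_frames:]
    if len(window) < 2:
        return False
    travel = window[0] - window[-1]                 # positive when the hand moved left
    steps = [a - b for a, b in zip(window, window[1:])]
    leftward = sum(1 for s in steps if s > 0)       # frames where the hand moved left
    # Require enough total travel and that at least 80% of steps go left.
    return travel >= min_travel and leftward >= 0.8 * len(steps)


# A steady leftward sweep of about 0.42 m over 15 frames counts as a swipe.
print(is_swipe_left([0.5 - 0.03 * i for i in range(15)]))
```

A rule like this is brittle, which is exactly why we wanted Wekinator: a trained model can learn each person's own version of the gesture from examples instead of fixed thresholds.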

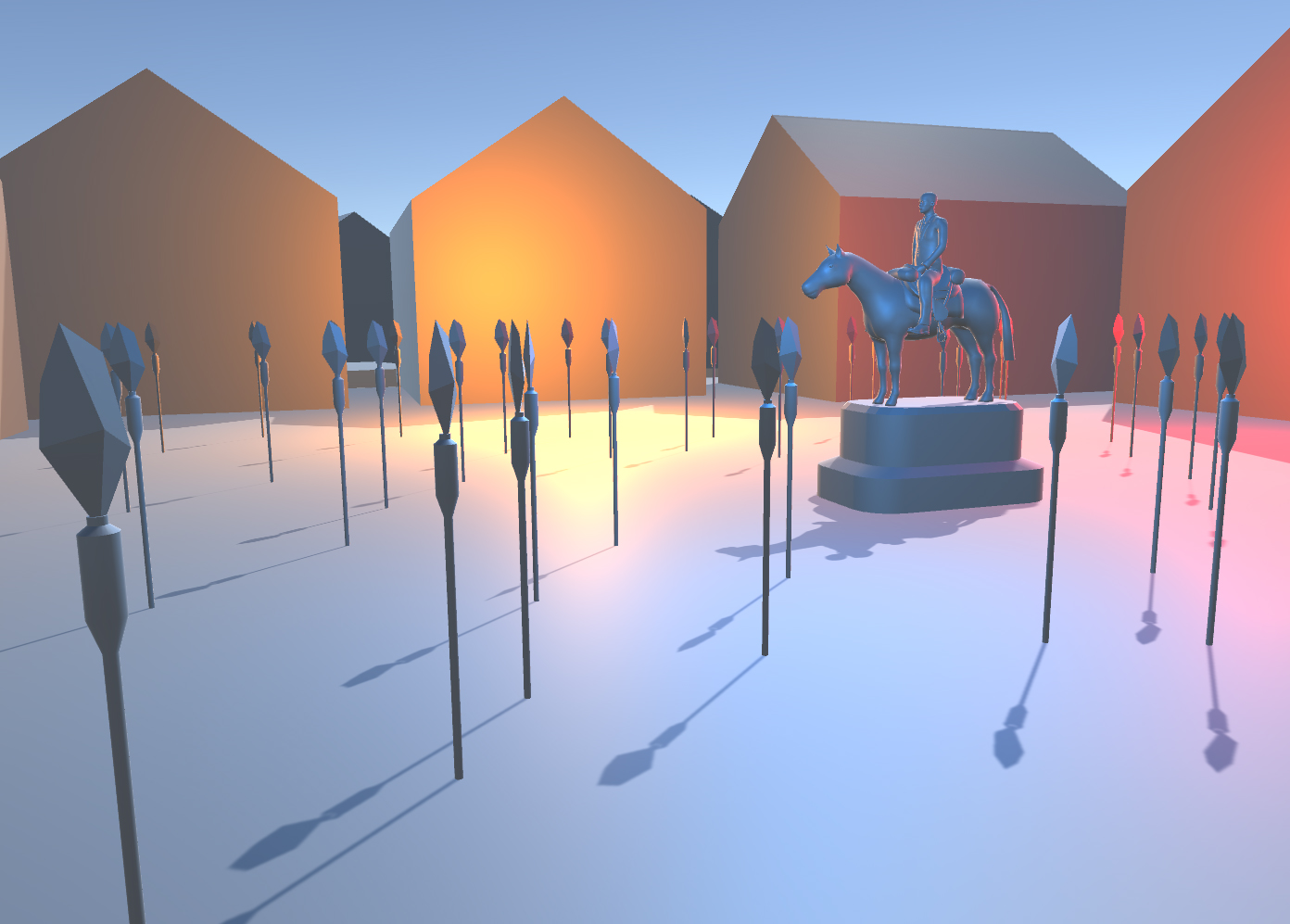

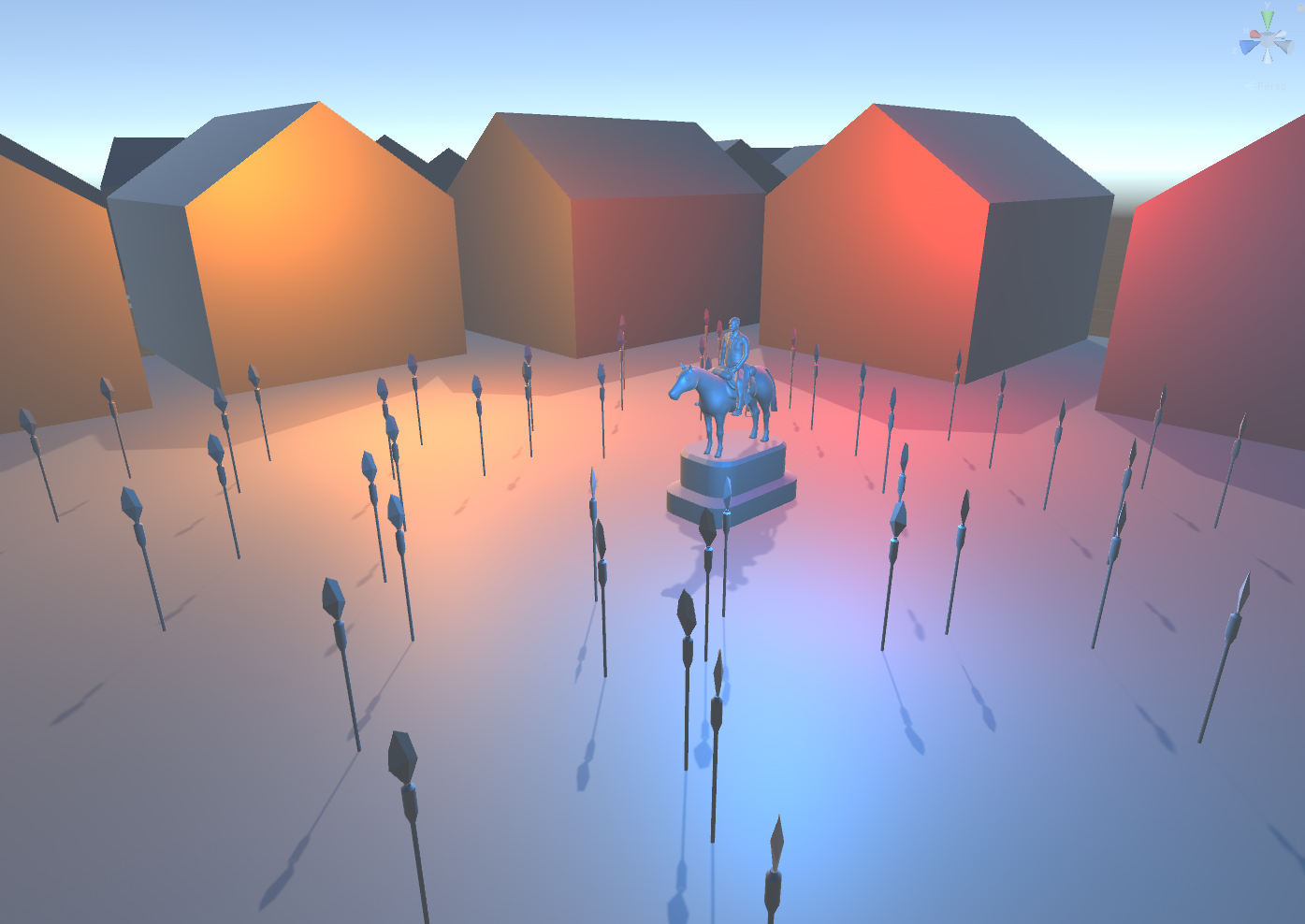

We sketched our VR information-removal experience in Unity, creating moving blocks that displayed tweets and were placed on different planes.

We also talked about using the shh behavior in augmented reality on the phone: our information would appear in 3D form, and the finger-to-lips gesture would remove specific types of it. We also had fun with the idea of behaviors across spaces, and thought it would be interesting for one behavior to remove a specific type of information in one environment while the same behavior triggers the removal of a different type of information in another. So a swipe left in VR might result in tweet removal, whereas a swipe left in augmented reality might remove spam.
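The idea that one behavior means different things in different environments amounts to a lookup keyed on both the environment and the gesture. The sketch below is a hypothetical Python illustration, not part of our prototype: the `BEHAVIOR_MAP` entries follow the examples in the text (swipe left removes tweets in VR and spam in AR, shh removes audio, a triple click removes spam on the desktop), while the item format and the `remove_for` helper are assumptions.

```python
# Hypothetical table: (environment, gesture) -> type of information to remove.
BEHAVIOR_MAP = {
    ("vr", "swipe_left"): "tweet",
    ("ar", "swipe_left"): "spam",
    ("ar", "shh"): "audio",
    ("desktop", "triple_click"): "spam",
}


def remove_for(environment, gesture, items):
    """Return the items left after applying the gesture's removal rule in this environment."""
    target = BEHAVIOR_MAP.get((environment, gesture))
    if target is None:
        return list(items)    # gesture means nothing here: keep everything
    return [item for item in items if item["type"] != target]


items = [
    {"name": "tweet A", "type": "tweet"},
    {"name": "promo", "type": "spam"},
    {"name": "voice memo", "type": "audio"},
]
print([i["name"] for i in remove_for("vr", "swipe_left", items)])
print([i["name"] for i in remove_for("ar", "swipe_left", items)])
```

Keeping the mapping in one table makes it easy for each user to rewire what a behavior does per environment without touching the gesture recognition itself.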

Lastly, we’d like to note a funny thing that happened during prototyping: we actually lost the Unity sketch we had made to explain the concept, which is fitting considering our topic. We thought about making this a performance piece.

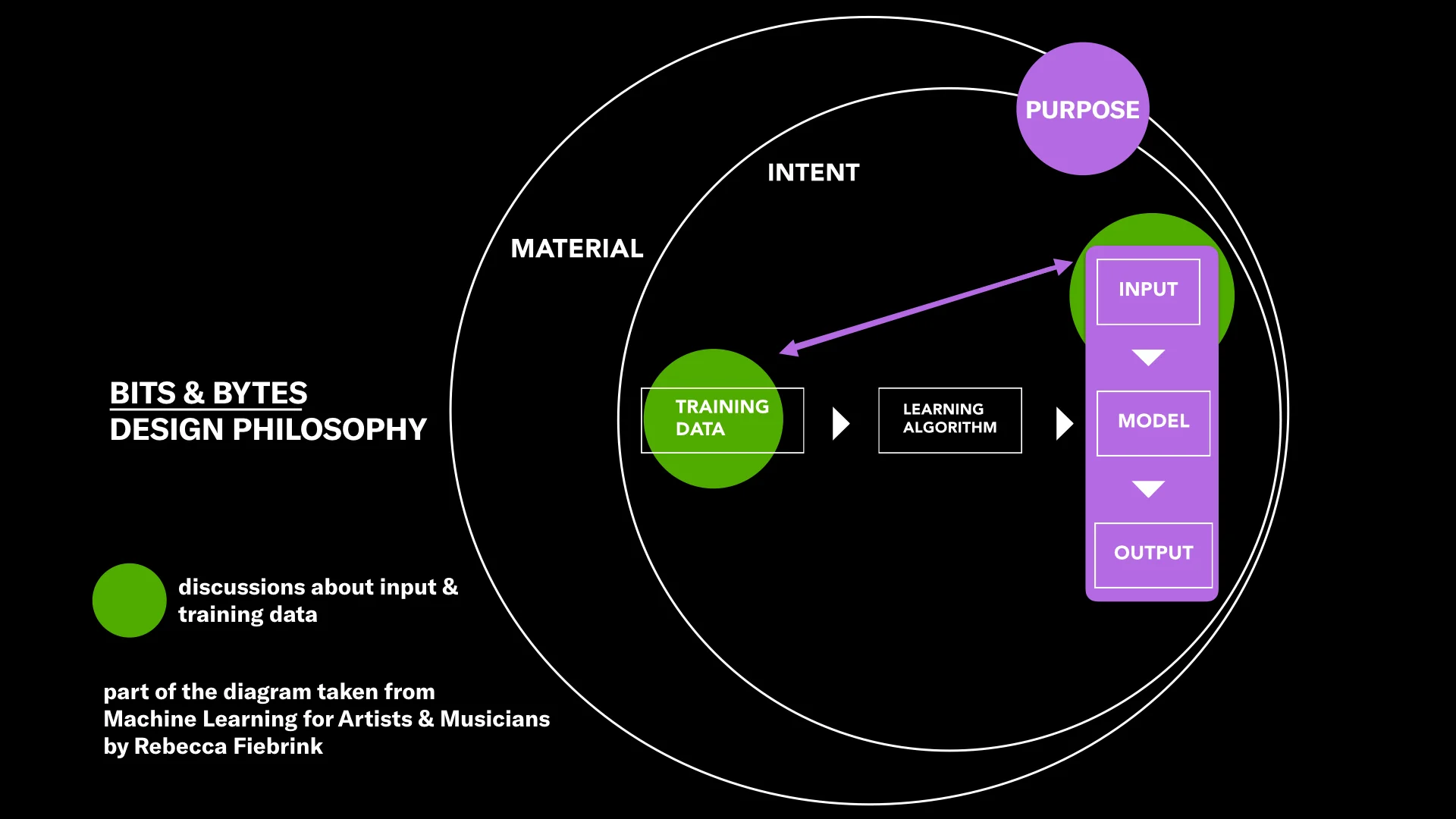

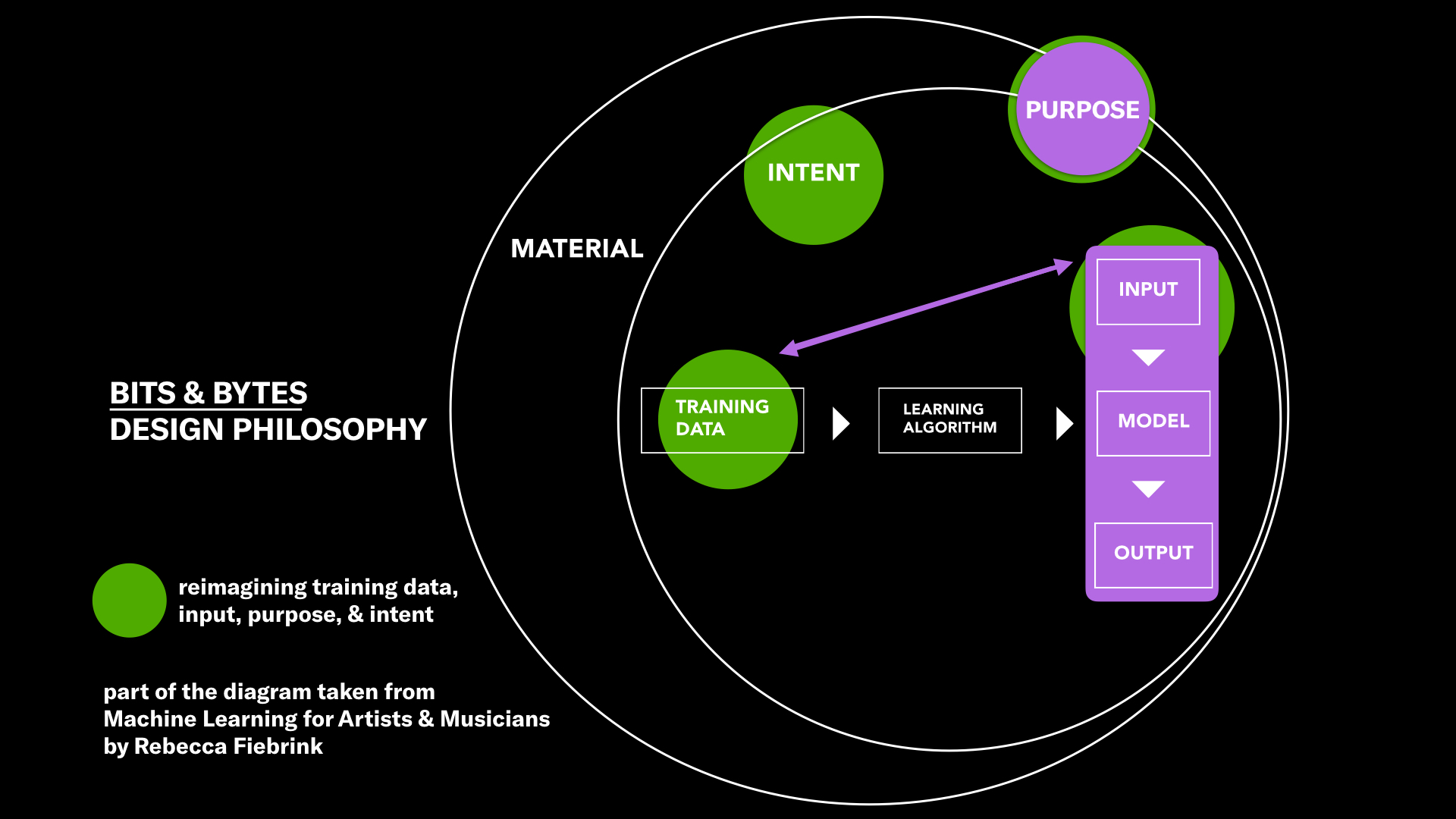

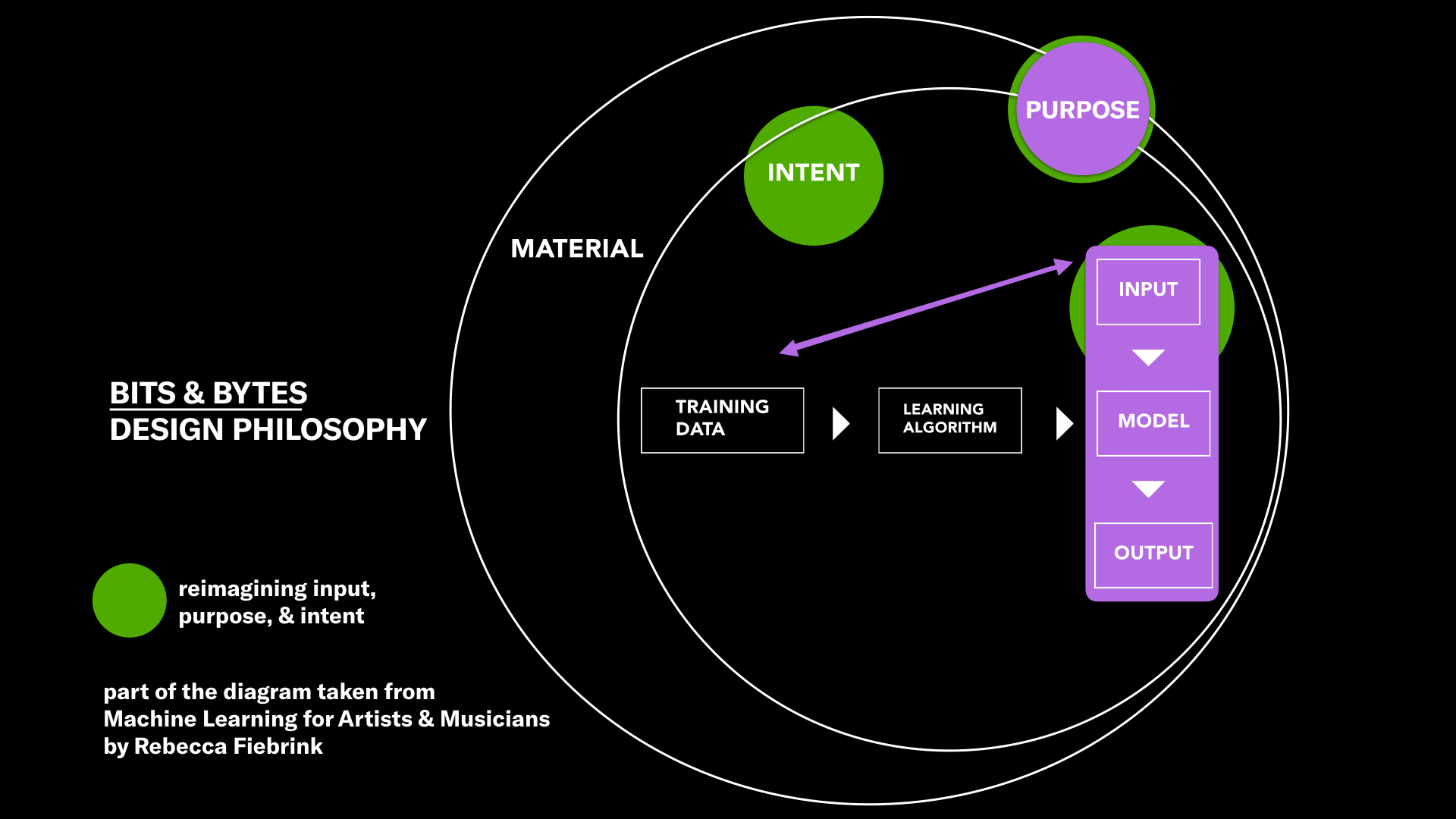

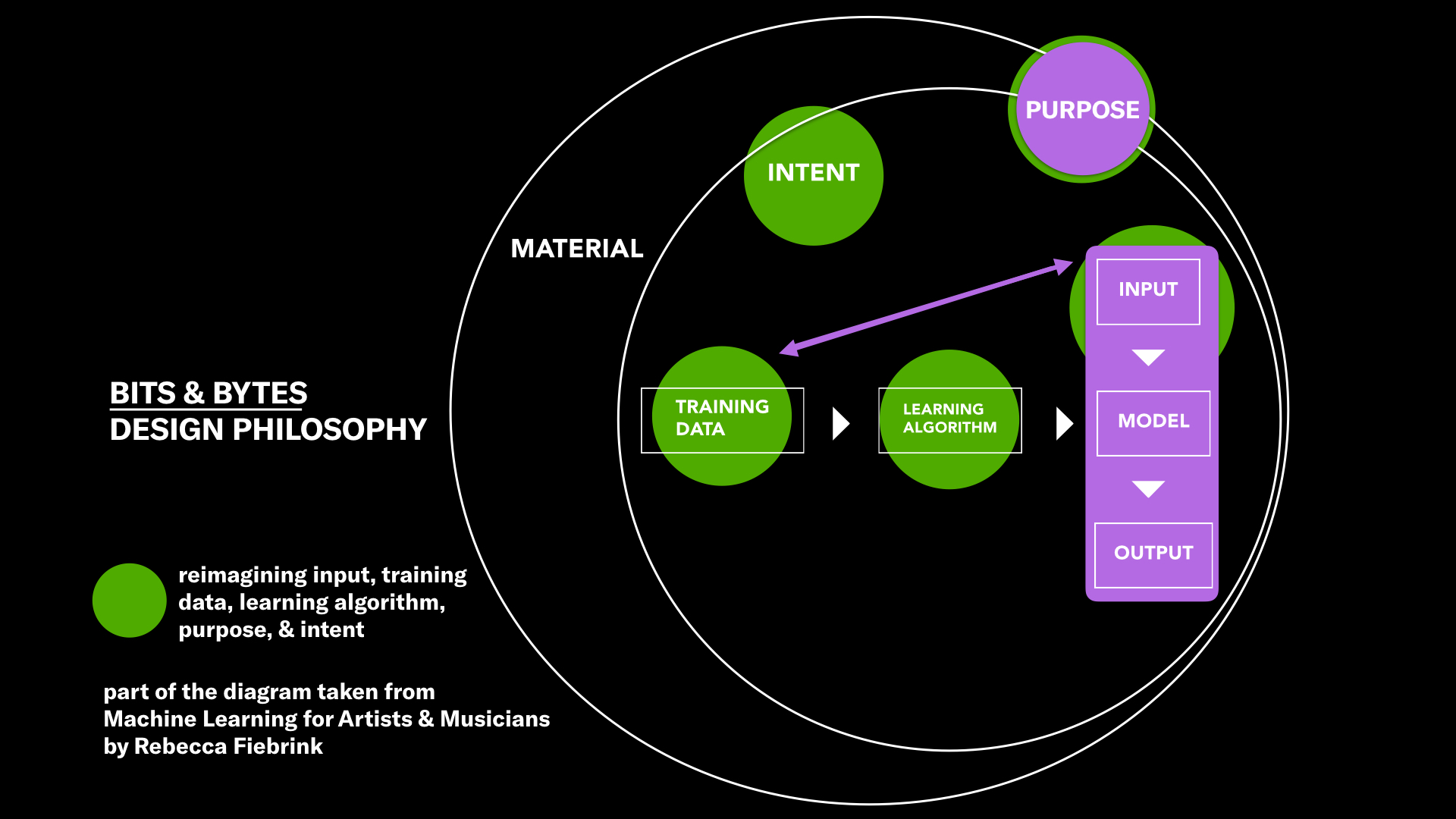

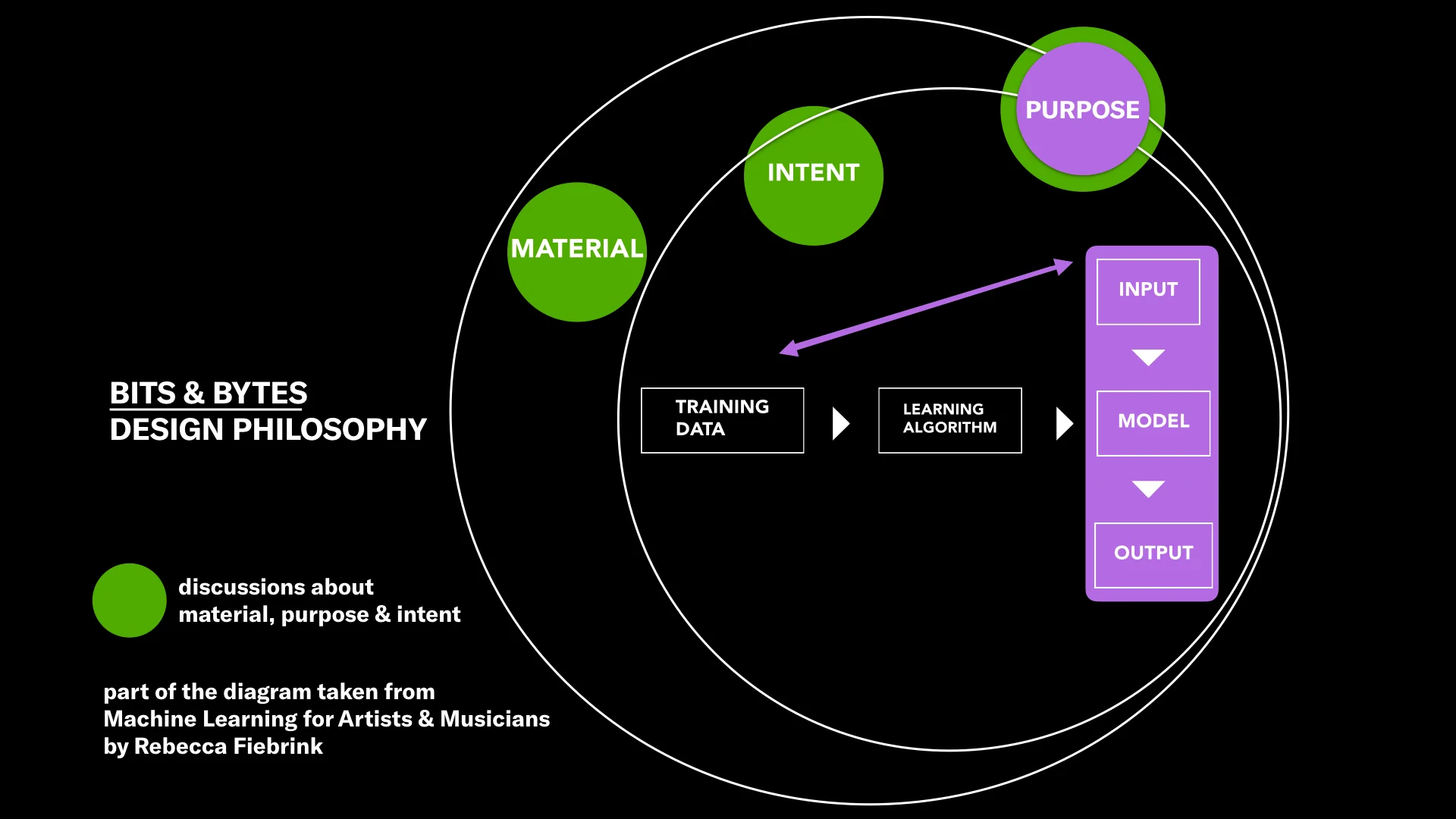

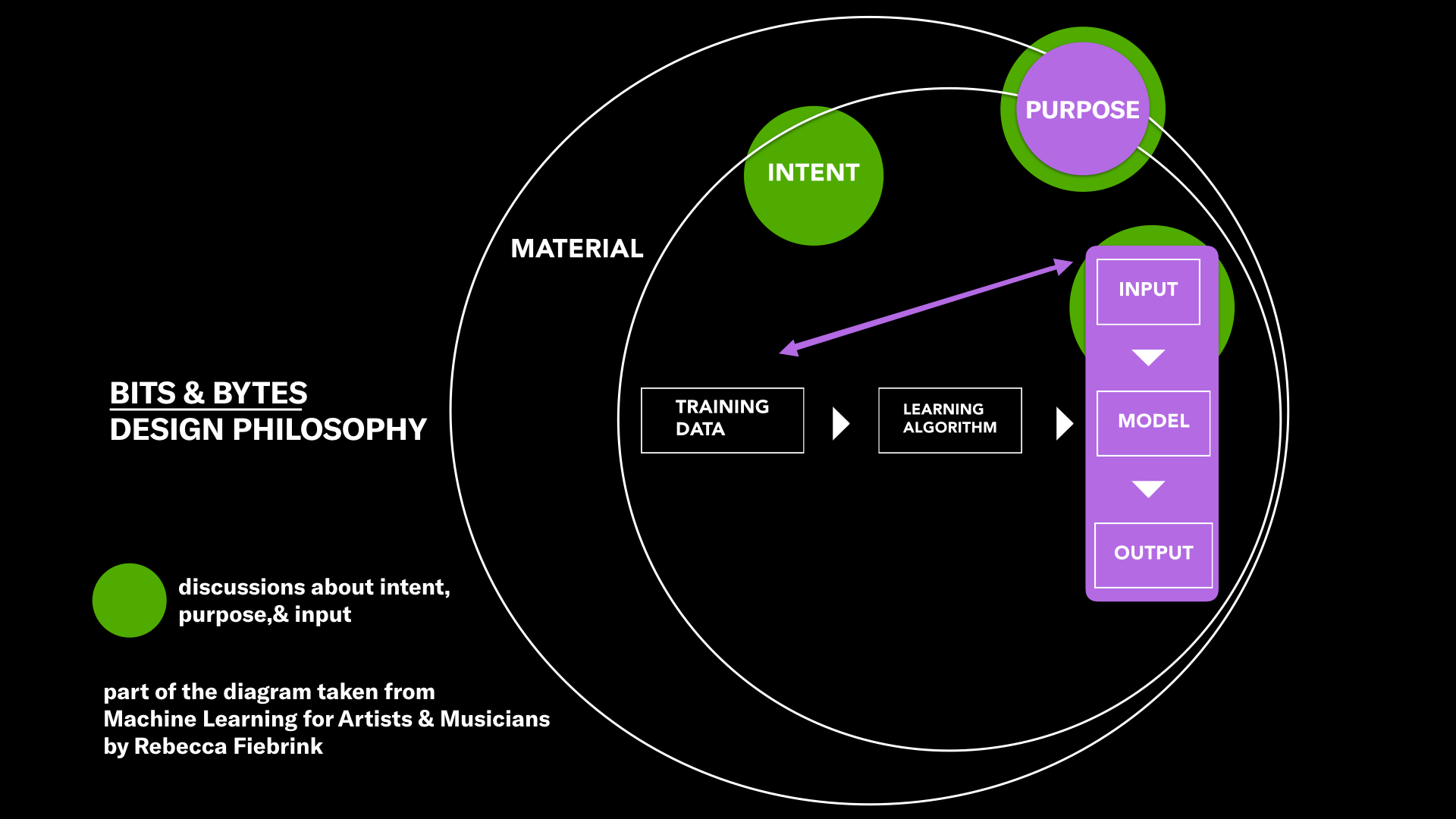

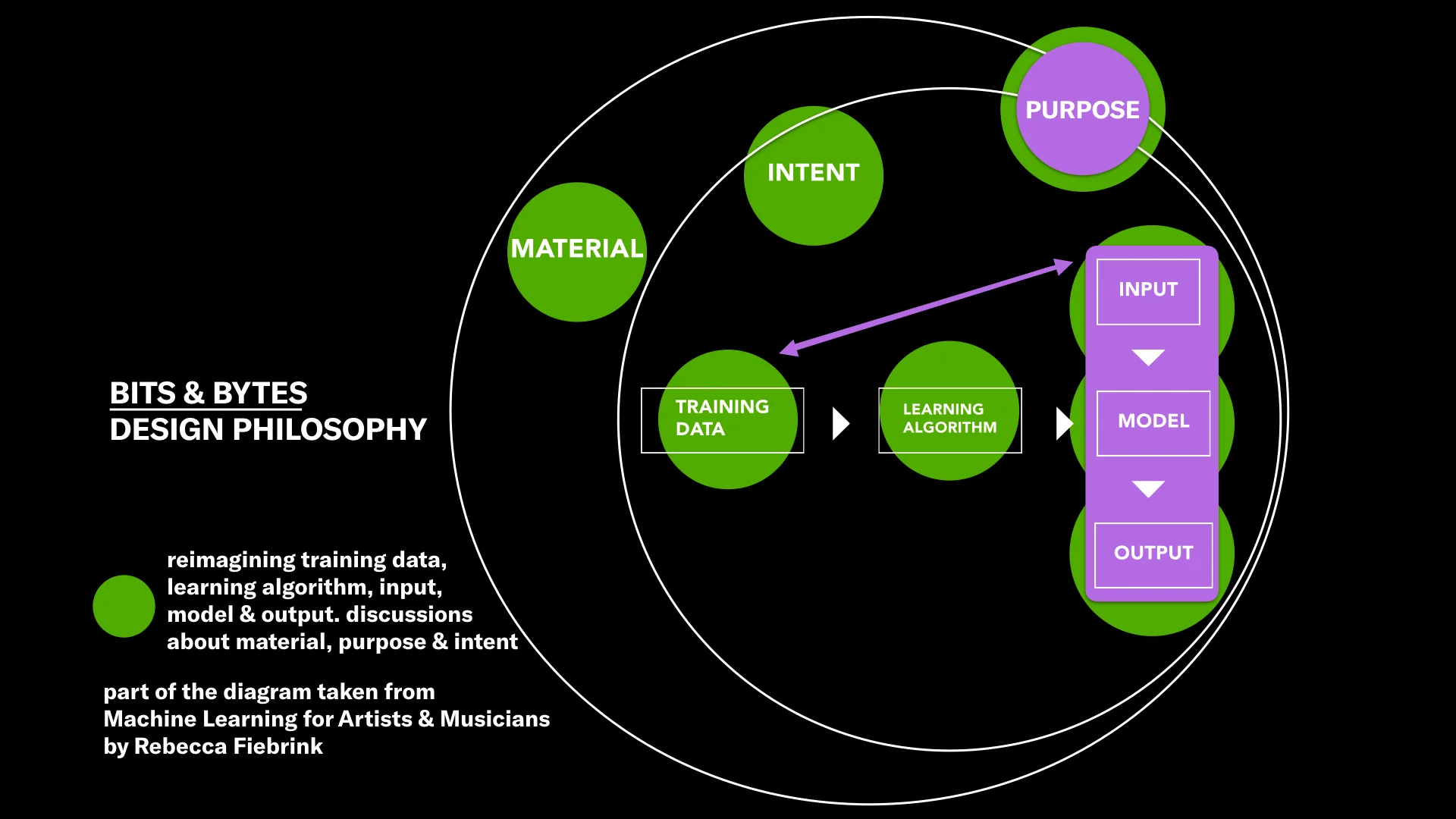

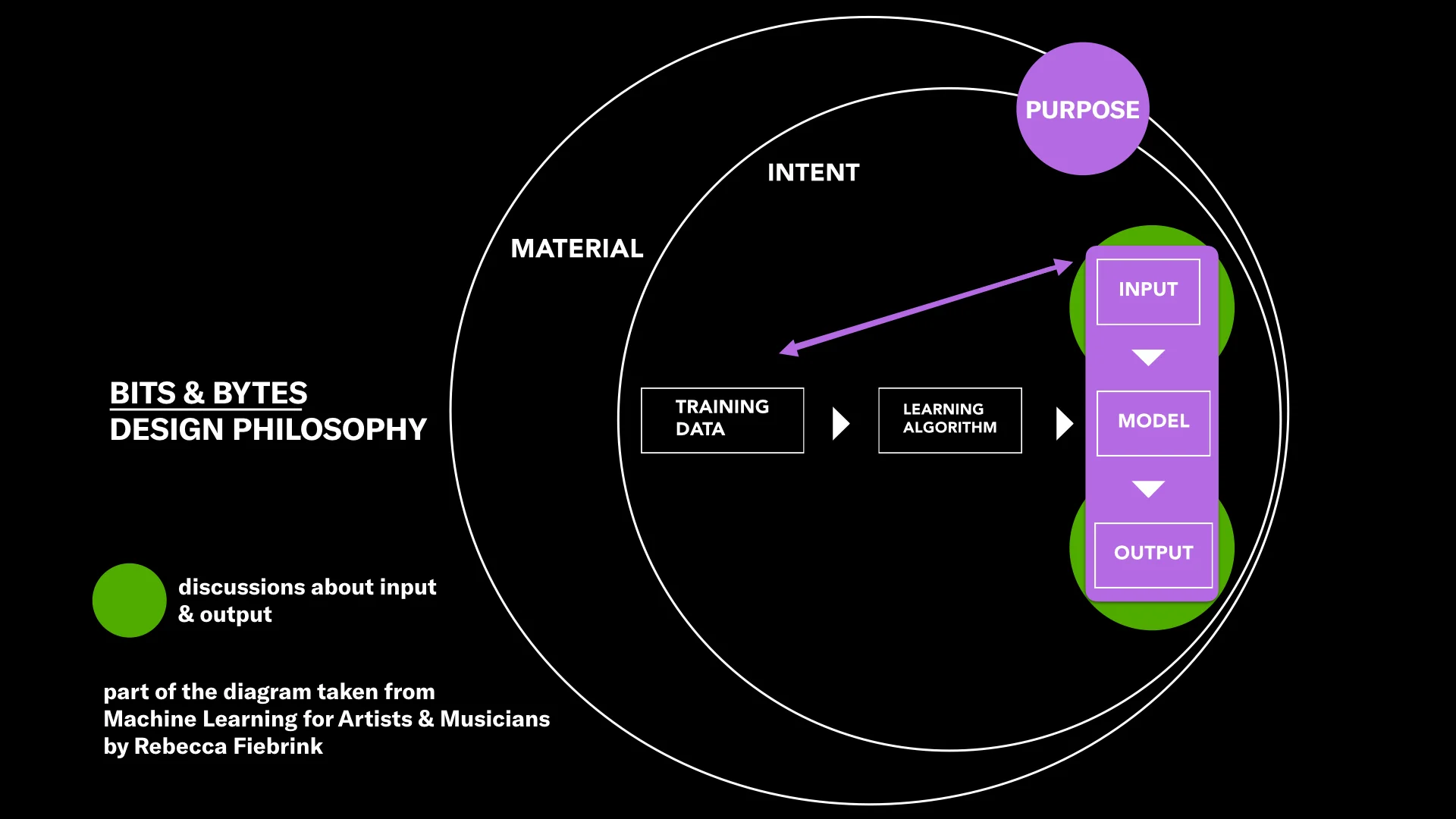

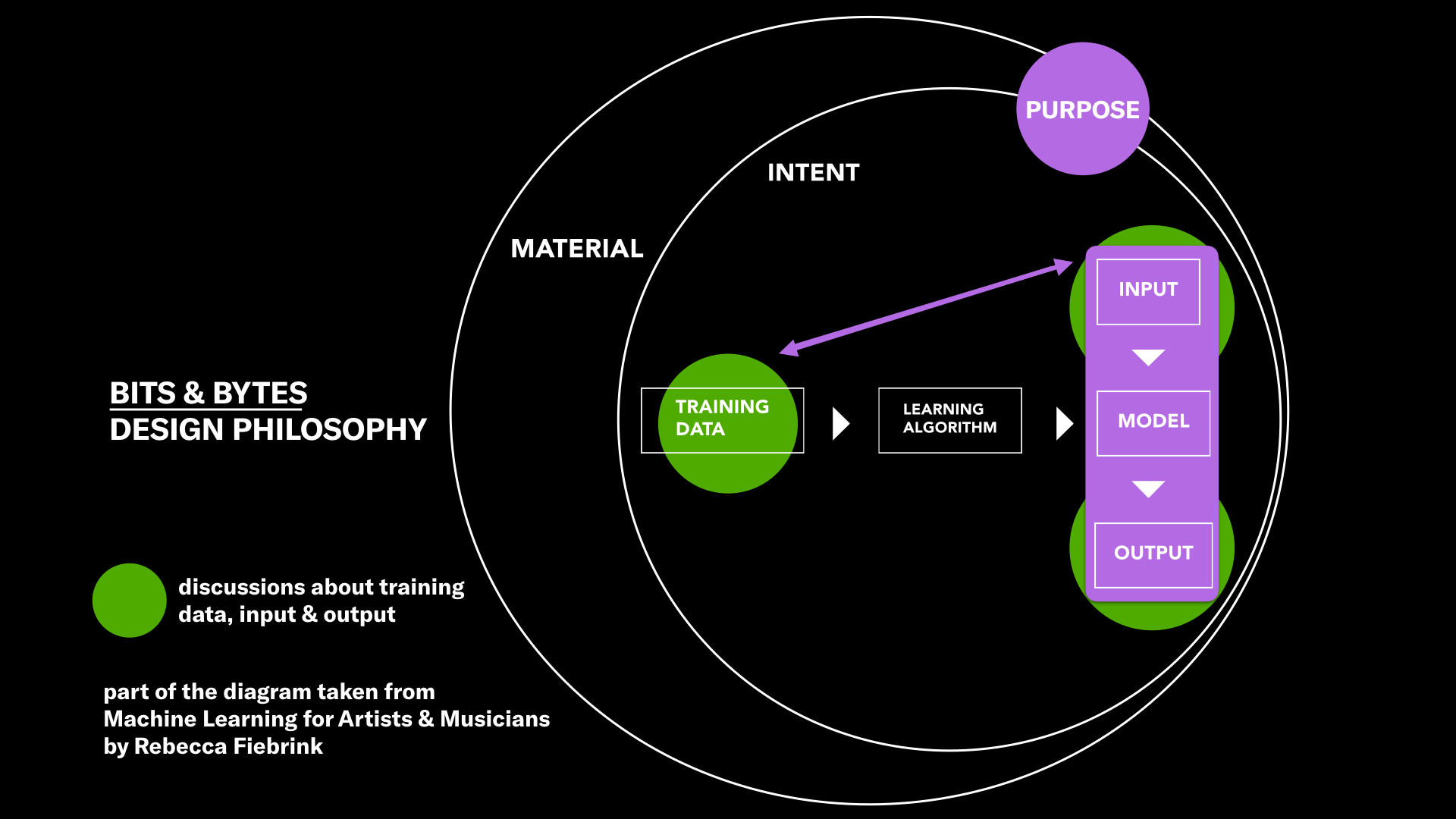

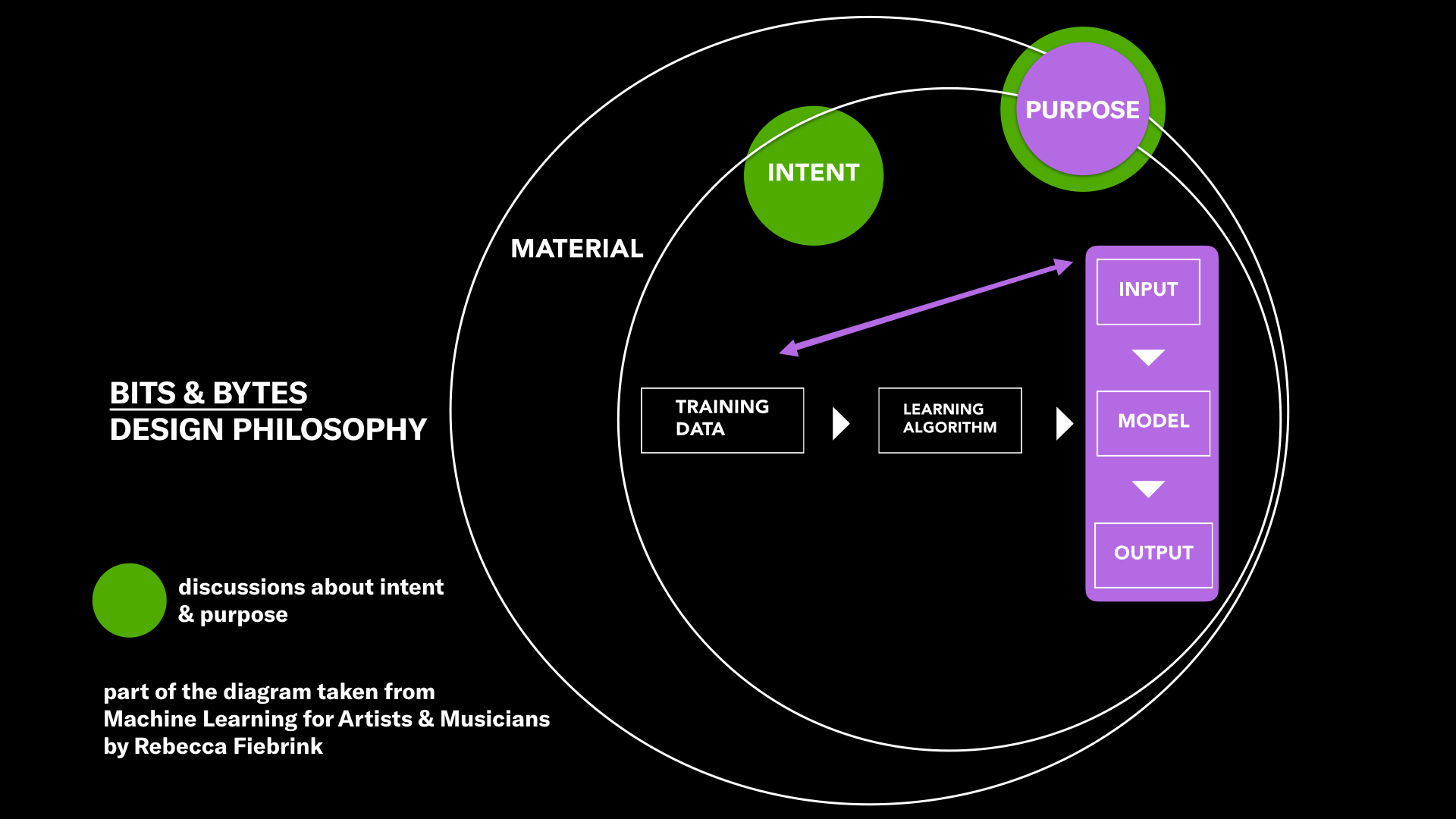

Primary AI design tool: Wekinator, created by Rebecca Fiebrink. Wekinator receives inputs, processes them using computational functions called models, and those models then produce outputs. The models are built using supervised machine learning algorithms.
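The inputs → model → outputs idea can be shown with a tiny supervised classifier. This is not Wekinator's code: it is a from-scratch k-nearest-neighbour classifier, one of the classifier types Wekinator offers, written as a minimal Python sketch. The two input features (hand-to-mouth distance and horizontal hand speed) and the example values are hypothetical stand-ins for what a Kinect might provide.

```python
import math
from collections import Counter


class KNNModel:
    """Minimal k-nearest-neighbour classifier: input feature vector -> output class label."""

    def __init__(self, k=3):
        self.k = k
        self.examples = []    # (features, label) pairs recorded by the user

    def add_example(self, features, label):
        """Record one labelled training example (supervised learning)."""
        self.examples.append((list(features), label))

    def predict(self, features):
        """Label a new input by majority vote among its k closest training examples."""
        if not self.examples:
            raise ValueError("model has no training examples")
        nearest = sorted(self.examples, key=lambda ex: math.dist(ex[0], features))[: self.k]
        votes = Counter(label for _, label in nearest)
        return votes.most_common(1)[0][0]


# Hypothetical features: [hand-to-mouth distance (m), horizontal hand speed (m/s)].
model = KNNModel(k=3)
for f in ([0.05, 0.0], [0.08, 0.1], [0.06, 0.05]):
    model.add_example(f, "shh")
for f in ([0.5, -1.2], [0.45, -1.0], [0.55, -1.4]):
    model.add_example(f, "swipe_left")
for f in ([0.6, 0.0], [0.5, 0.1], [0.7, 0.05]):
    model.add_example(f, "none")

print(model.predict([0.07, 0.02]))    # hand near the mouth, barely moving
```

Training here is just recording examples, which mirrors the Wekinator workflow of demonstrating a gesture a few times per class and letting the model generalize from those demonstrations.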

Assets (in Unity: ideas about prefabs/models, avatars and materials)

* text bubbles

* audio files

Environment: This depends on the space. In VR, the user would be on a plane and the surrounding environment would be a solid color or have a space background.

Behavior Design: The behavior we designed for was protest, and we suggested mouse clicks, swiping left, and bringing a finger to the lips in a "shh" action. These behaviors varied across environments, but the intent was to protest.

Inputs

* Camera (webcam, Kinect or Leap Motion)

* Mouse

* Screen

Outputs

* Removal of audio files.

* Removal of bubbles / cubes in Unity.
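On the output side, a Wekinator classifier reports the recognised class as a number, which the receiving program turns into a removal. The sketch below decodes such an OSC message with the standard library and removes matching objects from a stand-in scene. It assumes Wekinator's default output address `/wek/outputs` and a single float class value, as we understand the defaults; the `CLASS_ACTIONS` mapping and the dictionary-based scene (standing in for Unity bubbles/cubes and audio files) are hypothetical.

```python
import struct

OUTPUT_ADDRESS = "/wek/outputs"    # assumed Wekinator default output address

# Hypothetical mapping from class number to the type of object removed (None = no gesture).
CLASS_ACTIONS = {1: None, 2: "audio", 3: "bubble"}


def _read_osc_string(data, offset):
    """Read a null-terminated OSC string; return it and the next 4-byte-aligned offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    return text, (end + 4) & ~3      # skip the terminator and padding


def decode_osc_floats(data):
    """Parse an OSC message whose arguments are all floats; return (address, [floats])."""
    address, offset = _read_osc_string(data, 0)
    tags, offset = _read_osc_string(data, offset)
    if not tags.startswith(",") or set(tags[1:]) - {"f"}:
        raise ValueError(f"expected only float arguments, got {tags!r}")
    values = struct.unpack_from(">" + "f" * (len(tags) - 1), data, offset)
    return address, list(values)


def apply_output(data, scene):
    """Remove scene objects of the type selected by the classifier's output class."""
    address, values = decode_osc_floats(data)
    if address != OUTPUT_ADDRESS or not values:
        return list(scene)
    target = CLASS_ACTIONS.get(round(values[0]))
    return [obj for obj in scene if obj["type"] != target]
```

Rounding the float class value before the lookup guards against the class number arriving as, say, `2.0` rather than an exact integer.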

Data: We set aside the data provided and decided to train on our own data, using a personalized AI tool to train our models on it.

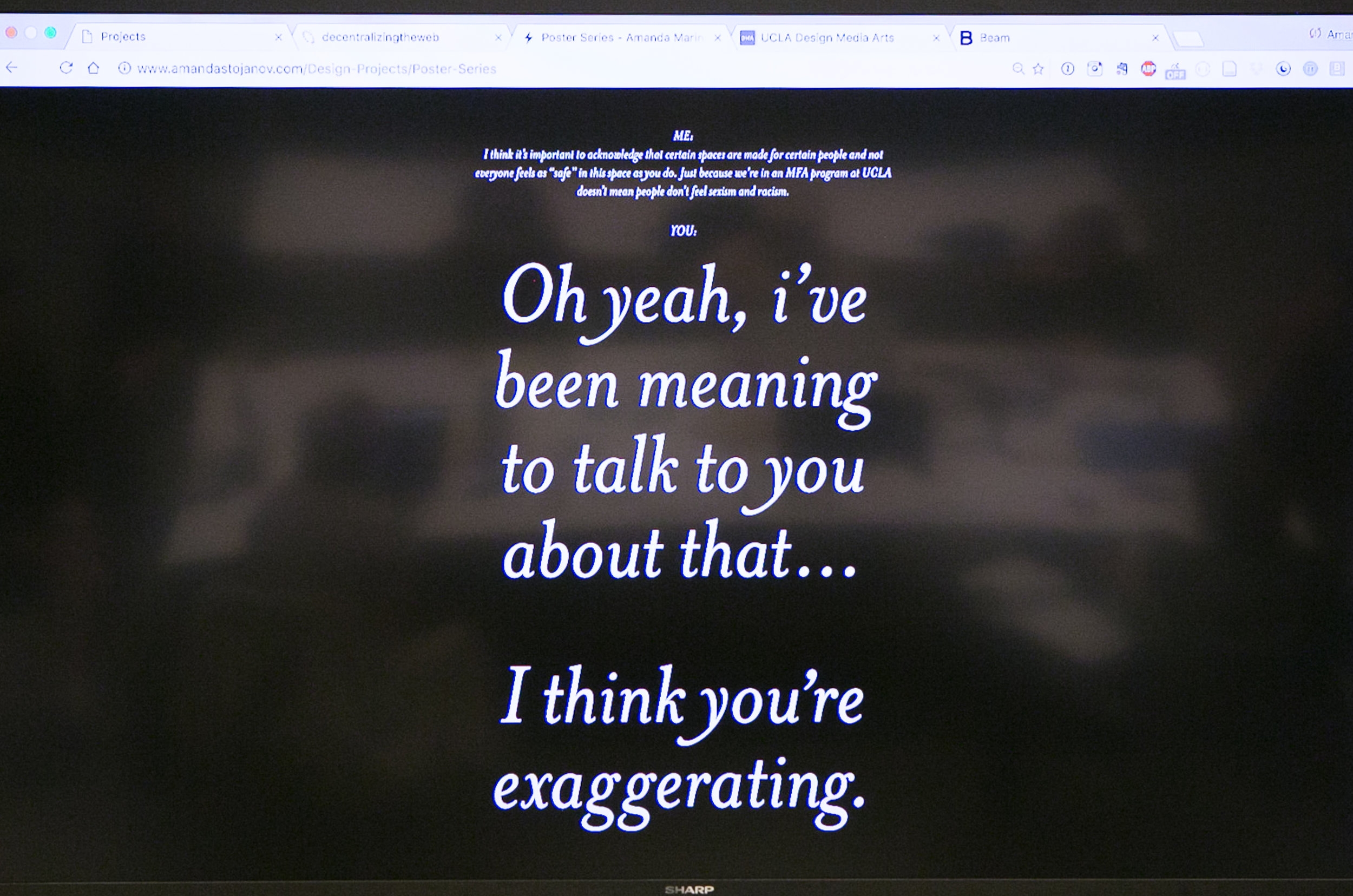

Definition of Protest: Rather than engaging in discourse with information, we remove it without directly engaging with its content. We have trained our AI to identify which content to remove in which environment.

Which reality? We wrote about designing for four realities.

AI Design Team: Debra Do, Astha Vagadia,